"VerQAG" Device:

“Self-Healing through Self-Entertaining”

The “VerQAG” device, subject to patent registration under the same trade name, is a head-mounted visioning device that incorporates a slot for inserting a smartphone and built-in electronics for operating specifically functioning lenses equipped with a set of detachable tinted color filters (red-cyan, yellow-cyan, red-green). These emulate the user’s spatial immersion into a pseudo-self-presence 3D virtual reality environment, on condition that either “Quads-art” graphics or “Qualified Associative Graphics” are shown on the smartphone display.

As a binocular device for observing multimedia content, the “VerQAG” device may, to a certain extent, be compared with a Virtual Reality headset; however, it differs considerably.

Nevertheless, the technology of making specifically designed graphics that cause the “Bi-Visual Fluctuations Perception” phenomenon may be adapted for and adopted by already-existing VR technology.

It is necessary to emphasize that the “VerQAG” device is not intended for game-like “Virtual Reality” experiences as the term is commonly understood.

Initially designed for the healthcare market, the “VerQAG” device is normally used for practicing therapies developed from the so-called “self-healing through self-entertaining” concept and from the phenomena discovered along with it.

The “VerQAG” device is basically to be:

1) considered a “low-cost” self-care tool that might be used in the sector approbating brain-control interface technology,

2) employed in a variety of caregiver training programs addressing mental health, psychological and vision-impairment issues,

3) applied in experimental research on Brain-Harmonization Frequency-Based NeuroTechnology.

VerQAG:

Helping people acquire New 3D-Experience In Binocular & Color Vision

In theory, using the “VerQAG” device may help the brain re-learn depth and color perception, so that stereo-blind and color-blind people could become stereo- and color-acute by regularly practicing specifically designed training programs.

If you are a stereo-blind or color-blind individual, or a person who can see the 3D effect but finds it uncomfortable and irritating, you are welcome to:

(1) Join the “Doubled Objects Stereo Abstracted Vision Entertainment Therapy” Program, designed to find the most effective way of utilizing the “Bi-Visual Fluctuations Perception Phenomenon” so that people with certain visual perception deprivations and disorders can watch movies or any multimedia content on smartphones using the “VerQAG” device;

(2) Take part in the "EL DIO" PROJECT, which aims to evidence the effectiveness of training-based practices with Anaglyph Binoculars Graphics using the “VerQAG” device.

A prototype-pattern of the “VerQAG” device has been examined against the certification requirements of Panamanian national legislation (Ley No. 1 de 10/01/2001: Sobre Medicamentos y otros Productos para la Salud Humana; Decreto Ejecutivo No. 248, 3 June 2008), transposing Art. 2 of Decreto Ejecutivo No. 468 on medical devices, and has been defined in conformity with the “Medical Device Technical Criteria” as:

“A medical device is any instrument, apparatus, implant, material or other similar or related article, used alone or in combination, including accessories and software necessary for proper application, intended by the manufacturer for use with humans for (a) diagnosis, prevention, monitoring, treatment or alleviation of disease (b) investigation, replacement or support of the anatomical structure or a physiological process”.

For further identification of the product covered, see the Medical device classification Categories:

Class A, B, C, and D, Decreto Ejecutivo No. 468, Art. 18. Classification rules are detailed in Decreto Ejecutivo No. 468, Appendix No. 6.

VerQAG:

Decoding Dedicated Acronyms

There are two expansions of the acronym “Ver”, each of which applies to a certain graphic-making technology dedicated to creating and delivering specifically designed visual content; thus “Ver” stands for both “Virtual emulated-reality” and “Virtually-emulated reality”. Combining these two terms, the acronym written in capital letters, “VER”, is also to be decoded as “Virtual Emulated Reality”.

The definition of “Virtual Emulated Reality” applies to the technology abbreviated as “VER-T.ECH”, in which “ECH” is a shortening (the first three letters) of the words “echelon” and “echo”.

The notion of “Virtually-Emulated Reality” mostly applies to in-cloud emulation of the virtual computing of visual content that represents the graphical environment of “Virtual Emulated-Reality”.

The notion of “Virtual Emulated-Reality” may be generally applied to an audiovisual content environment that is:

a) created by means of computing virtualization and emulation,

b) to be perceived, or better to say observed, with the usage of the “VerQAG” device.

In addition, the term “Virtual Emulated-Reality” defines the dream-experiencing environment that might normally be perceived during a sleep-like state (SELF-HYPNOSIS MEDITATIVE SLEEP STATE), which may be compared and associated with a dream-like meditation conditioned on immersion into alternate states of mind.

“Virtual Emulated-Reality” technology is to be applied for creating and displaying Anaglyph Binoculars Graphics that cause the “Anaglyph-Sync” visual perception effect of the “Bi-Visual Fluctuations Perception Phenomenon”. It offers an alternative for stereo-blind and color-blind consumers left behind by modern 3D and VR technologies for mobile phones.

VER-T.ECH basically proposes improving the usability and increasing the adoption of affordable, stand-a-chance mobile applications of Virtual Emulated Reality, based on:

(1) employing the “VerQAG” device across platforms, implementing innovative local-host networking interaction among users in an in-cloud virtualization-emulation environment;

(2) delivering location-based multimedia content and experiences along with new “Inside Out” tracking biofeedback for “self-healing through self-entertaining” training-based programs and therapies such as, for instance, “SELF-HYPNOSIS MEDITATIVE SLEEP-THERAPY” and “Doubled Objects Stereo Abstracted Vision Entertainment Therapy”.

The abbreviation “QAG” stands for both “Quasi-Anaglyph Graphic” and “Qualified Associative Graphics”.

The notion of “Qualified Associative Graphics” applies to the specific graphics used for practicing task-performing, audiovisual-stimuli-conditioned exercises, so-called “AudioVisual Synergy-Synesthesia Charactery Stimulation”, with the usage of the “VerQAG” device.

AudioVisual Synergy-Synesthesia Charactery Stimulation is the core of “AudioVisual Synergy-Synesthesia-Synchronization Compositing Frequency-based NeuroTherapy” (“AV3SynC-NeuroTherapy”), which, to a certain extent, results in experiencing an Autonomous Sensory Meridian Response (ASMR).

AV3SynC-NeuroTherapy is a training-based program that consists of practicing task-performing and self-entertaining audiovisual-stimuli-conditioned eye exercises with the usage of the “VerQAG” device.

The notion of “Quasi-Anaglyph Graphic” applies to the “Quads-art” imagery components, which are intended to be manipulated through mobile application functions that basically allow -

(2) stimulating brainwave activation along with boosting or, if needed, inhibiting neurotransmitters toward desirable patterns.

The “Bi-Visual Fluctuations Perception” phenomenon is expected to arouse so-called “virtually-perceived extremely-low-energy brainwaves of infrasound and ultrasound bandwidth” in targeted zone(s) of the left and right brain hemispheres.

“VerQAG” is basically to be used with visual-stimuli-training mobile applications designed:

1) to cause the specific visual perception effect of “Bi-Visual Fluctuations Perception”,

2) to cause the chromo-therapeutic effect of “Anaglyph Color (Chromo)-Synesthesia”,

3) to stimulate the development of natural 3D-effect vision,

4) to stimulate the development of the ability to experience vivid, second-life-like dreams accompanied by pleasant sensations and feelings while sleeping.

The simplified technological prototype-pattern of the “VerQAG” headset was initially worked up from an already-existing visioning device, modified and equipped with a specifically functioning red-cyan pair of detachable lenses.

The pair of lenses or color filters might be changed for yellow-cyan or red-green pairs, according to the needs of self-experimenting and self-practicing visioning programs. These programs aim to create a desired meditative self-hypnosis state of mind and altered states of consciousness, together with increased mental processing, happiness, better perception of reality, incredible focus, better self-control and richer sensory experience. They also aim to develop particular extraordinary abilities and to cultivate cognitive, linguistic and creative skills through the author-developed “know-how” audiovisual mindfulness-training.

The effectiveness of the audiovisual mindfulness-training is conditioned on simultaneously viewing “Quads-art” graphics and listening, through headphones, to a specifically composed meta-soundtrack, which together create a harmonically perceived immersion into the audiovisual environment. The musical composition of the meta-soundtrack may vary depending on the “Quads-art” imagery plot, which might be changed according to the user’s preferences.

The meta-soundtracks might be composed with “hypersonic sounds” inserted into them. Hypersonic sounds, or ultrasounds, lie above human hearing (about 20,000 Hz and higher), like, for instance, the squeal of bats. They may affect the acoustic perception of audible sounds and might serve to modulate the musical composition included in a meta-soundtrack composed with musical instruments. The frequency bandwidth of the instruments applied should correlate with the targeted frequencies and their harmonics, which are supposed to be caused by both the “Anaglyph-Sync” and “Uni-Sync” visioning effects.

The basic musical instruments are the piano (25-4,100 Hz) and the guitar (83-880 Hz).

Additionally, the sounds of the following musical instruments might also be successfully used:

Bass drums - as low as 30 Hz

Bass guitar - 30-200 Hz

Bass tuba - 44-349 Hz

Cello - 66-987 Hz

Trombone - 83-493 Hz

French horn - 110-880 Hz

Trumpet - 165-987 Hz

Clarinet - 165-1,567 Hz

Violin - 196-3,136 Hz

Flute - 262-3,349 Hz

Cymbals - up to 15,000 Hz
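As a rough illustration of matching instruments to a targeted frequency and its harmonics, the ranges listed above can be put into a small lookup. This is only a sketch: the function names `harmonics` and `instruments_covering` are hypothetical, and the two open-ended entries (bass drums, cymbals) are left out because the list gives only one bound for them.

```python
# Frequency ranges in Hz, copied from the instrument list above.
# Bass drums and cymbals are omitted: the list states only one bound for each.
INSTRUMENT_RANGES_HZ = {
    "piano": (25, 4100),
    "guitar": (83, 880),
    "bass guitar": (30, 200),
    "bass tuba": (44, 349),
    "cello": (66, 987),
    "trombone": (83, 493),
    "french horn": (110, 880),
    "trumpet": (165, 987),
    "clarinet": (165, 1567),
    "violin": (196, 3136),
    "flute": (262, 3349),
}


def harmonics(base_hz, count):
    """Return the first `count` harmonics of base_hz (the base itself included)."""
    return [base_hz * k for k in range(1, count + 1)]


def instruments_covering(frequency_hz):
    """Return the instruments whose listed range contains frequency_hz."""
    return [
        name
        for name, (low, high) in INSTRUMENT_RANGES_HZ.items()
        if low <= frequency_hz <= high
    ]


if __name__ == "__main__":
    # Example: which instruments could carry a 110 Hz target and its harmonics?
    for freq in harmonics(110, 3):
        print(freq, instruments_covering(freq))
```

The target frequency of 110 Hz in the usage example is an arbitrary illustration, not a value taken from the text.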

Isochronic tones may also be included in the meta-soundtrack, switching on and off in predictable patterns, with the aim of creating a pulse effect on the correlated rectangular pulse currents caused by “Bi-Visual Fluctuations Perception”, which amplifies the therapeutic effect of the audiovisual stimulation training program.
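An isochronic tone in the general sense is a steady tone switched fully on and off at a regular rate. The sketch below shows one plain way to generate such a signal, assuming a sine carrier and a rectangular on/off gate; the function name, the carrier and pulse frequencies, and the 50% duty cycle are illustrative assumptions, not parameters given by the author.

```python
import math


def isochronic_samples(carrier_hz, pulse_hz, duration_s, sample_rate=44100, duty=0.5):
    """Return float samples in [-1, 1]: a sine carrier gated on and off.

    The gate opens pulse_hz times per second and stays open for the
    fraction `duty` of each cycle (rectangular envelope).
    """
    samples = []
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        phase = (t * pulse_hz) % 1.0         # position inside the current pulse cycle
        gate = 1.0 if phase < duty else 0.0  # rectangular on/off envelope
        samples.append(gate * math.sin(2 * math.pi * carrier_hz * t))
    return samples


if __name__ == "__main__":
    # Hypothetical values: a 440 Hz tone pulsed 10 times per second for one second.
    tone = isochronic_samples(440, 10, 1.0)
    print(len(tone), "samples generated")
```

The samples could then be scaled to 16-bit integers and written to a WAV file with Python's standard `wave` module.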

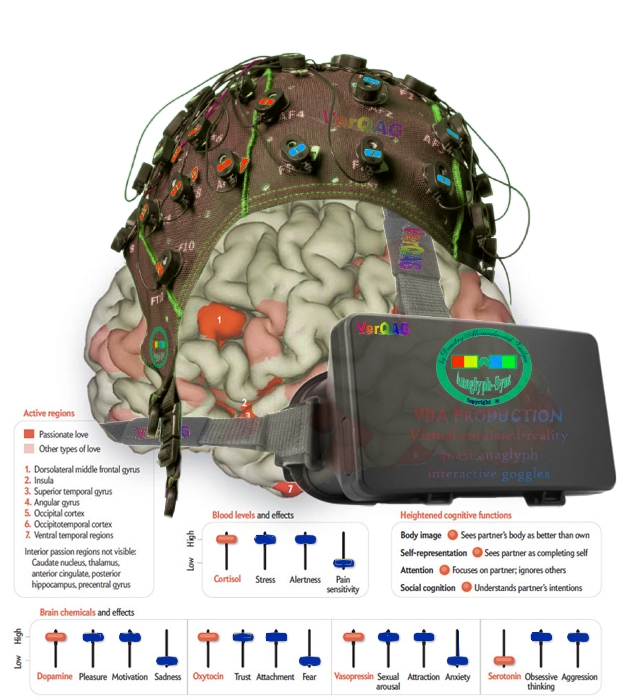

The consumer-oriented-release of “VerQAG” may be employed with a head-mounted brain-control-interface set (“VerQAG-Helmet”) applicable in cognitive psychology and behavioral neuroscience.

"VerQAG-Helmet" Set:

EEG-TMS emotion and sensation equalizer brain control feedback interface

VIRTUALLY EMULATED REALITY BRAIN CONTROL INTERFACE is a head-mounted set (helmet) that incorporates built-in headphones with sound-effect filters, an EEG cap and a portable TMS device, and interaction firmware electronics for a mobile application and a computer program (henceforth called “VERA-CITy-App” and “VERA-CITy-Com”, respectively). It is combined with a touch-screen-control handheld device (henceforth called the “Knack Touch” Communicator) and the “VerQAG” visioning device.

The “VERA-CITy-App” and “VERA-CITy-Com” interaction firmware allows access to the server of the “Virtually Emulated Reality of Artificial-Cognitive Intelligence Type System”, which facilitates simple and independent data acquisition from multiple devices over the “Intelligence Computing Optimization Network” that unites the multiple users of “VerQAG-Helmet”.

The built-in “know-how” sound-effects filtering of the left and right headphones relies on firmware for immersive 3D surround-sound effects. The firmware is configured to play different tones into the user’s right and left ears, producing a brainwave-entertainment soundtrack that includes isochronic beats matching the pulse frequencies caused by the “Anaglyph-Sync” and “Uni-Sync” visual perception effects.
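Playing a different tone into each ear amounts to writing two independent channels into a stereo audio stream. The sketch below builds a 16-bit stereo WAV in memory with Python's standard `wave` and `struct` modules. It is not the helmet firmware: the function name, the 200 Hz and 210 Hz tones and the amplitude are hypothetical choices made for illustration.

```python
import io
import math
import struct
import wave


def stereo_tone_wav(left_hz, right_hz, duration_s, sample_rate=44100, amplitude=0.3):
    """Return WAV file bytes: 16-bit stereo, left_hz on the left, right_hz on the right."""
    frames = bytearray()
    for n in range(int(duration_s * sample_rate)):
        t = n / sample_rate
        left = int(32767 * amplitude * math.sin(2 * math.pi * left_hz * t))
        right = int(32767 * amplitude * math.sin(2 * math.pi * right_hz * t))
        frames += struct.pack("<hh", left, right)  # interleaved left, right samples
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(2)   # stereo
        wav.setsampwidth(2)   # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(frames))
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical example: 200 Hz in the left ear, 210 Hz in the right, 5 seconds.
    with open("left_right_tones.wav", "wb") as out:
        out.write(stereo_tone_wav(200, 210, 5.0))
```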

1. Electroencephalograph-TMS Emotion and Sensation Equalizer Brain Control Feedback Interface

The wireless EEG bio-signal system for acquiring and controlling brainwaves, built on active electrode technology and integrated together with a portable TMS device into the “VerQAG-Helmet” as “EEG-TMS-feedback”, basically aims at the following:

- EEG measurement of small voltage changes and EEG monitoring of neuron activity in certain zones (areas) of the cortex, to identify and respond in real time to the user's brainwave activity correlated with the user's actions and movements;

- mapping of certain nerve impulses to the corresponding sensations, and of motor impulses to the corresponding concrete muscle contractions, which allows correcting and stimulating the user’s sensations and actions in the virtual reality toward desirable patterns;

- employing HD-audio technology that allows monitoring targeted audio signals higher than 48 kHz, which is outside the hearing range of any human ear, and/or higher than 16-bit linear bit depth, and converting them, once filtered, into vibrations that can represent a certain human action and give a sense of motion, while allowing the intensity of such vibrations to be adjusted and certain (newly targeted) left or right sound-channel frequencies to be filtered out;

- monitoring audio-video signals and using an electroencephalogram actuator to convert them into brainwave impulses that are responsible for representing the user’s targeted emotion and sensation cues;

- non-invasive TMS brain-stimulating feedback that produces a low pulse current, allowing brainwaves to be corrected to a desirable pattern in order to cause the anticipated altered states of mind in the user.

2. “Knack Touch” Communicator

The “Knack Touch” Communicator is a wireless handheld device for haptic gesture and touch-screen finger control feedback. It incorporates tactile sensors that measure the forces exerted by the user’s thumbs on the touch-screen interface installed on the front side of the device, and four sensors, one for each finger of the user’s palm, installed on the back side. These relate to the production of variable forces (sensations) and to interaction with a surface, allowing the creation of carefully controlled virtual objects in the virtual world environment and the control of more than 10 parameters simultaneously with both hands, so that the user takes full control over the construction of virtual reality environment scenes.

The touch-screen feedback surface simulates different kinds of tactile sensations on the user’s right and left thumbs by means of high-speed vibrations and other stimuli (detailing them is outside the focus of this publication).

The tactile 3D imaging of the “Knack Touch” Communicator translates the pressure of the user’s right and left thumbs into a signal that affects a concrete chosen digital image on the scene, changing its spatial virtual coordinates to imitate the user’s ability to control objects in the virtual reality environment.

As planned, the “Knack Touch” Communicator might be designed employing technologies aimed at giving the user tactile cues for gestures, invisible interfaces, textures, and virtual objects, such as ultrasound and non-contact feedback through the use of air vortexes caused by the manipulative movements of the user’s hands.

3. “VERA-CITy” Mobile Application and Computer Program

The basic interactive functions of the system components (end-user devices), such as the “Virtually emulated reality quasi-anaglyph goggles” and the “Electroencephalograph-TMS Emotion and Sensation Equalizer Brain Control Feedback Interface” Helmet, are available through the relevant mobile application and computer program (software), subject to patenting or copyright under the respective working names “VERA-CITy-App” and “VERA-CITy-Com”. Both “VERA-CITy-App” and “VERA-CITy-Com”, for which trade-mark applications are pending, are to be integrated into the “Virtually Emulated Reality of Artificial-Cognitive Intelligence Type System” as the implementation of the “Intelligence Computing Optimization Network” API, which adapts software and hardware to the user’s environment, and are thus to be called by the common name “VERA-CITy App-Soft” (trade-mark application pending).

The simplified representation of the “VERA-CITy-App” design illustrated below depicts only the basic functionalities (buttons) for the user’s voice-command interaction.

The interaction of the “VerQAG-Helmet”, the computer and the smartphone when accessing the cloud computing cross-platform infrastructure is conditioned on using Bluetooth, Wi-Fi (Mobile Hotspot) or, alternatively, USB-Tethering technologies.

The basic, intuitively understood functionalities of “VERA-CITy-App” allow the user to manipulate and operate objects on the display by changing alignment, size, colors and spatial dimension, and by performing series of activities. One of the specific functionalities of “VERA-CITy-App” is voice-command feedback.

The specific enhanced interactive functions of “VERA-CITy-App” are available on condition of working together with the relevant computer software (program), subject to patenting under the “VERA-CITy-Com” working name (a trade-mark), which basically allows the user:

- to load personal photos and images in PNG, GIF, TGA, etc. formats from a personal computer, mobile gadgets and popular social network profiles (Facebook, Twitter, Google+, etc.) in order to use them for “making self-personages in episode-actions” as desired;

- to change episode and plot events according to personal preferences, as well as to create their own “spin-off” animation stories;

- to cut the background out of personal photos and insert them into the scenes as separate objects in action;

- to edit storyboard virtual reality screening frames;

- to create an end-user profile by loading photos and images (background-cutting and anaglyph-converting options are available);

- to insert “self-hero-personages” (a basic movement-animating option is available);

- to choose, pick up and manipulate various media;

- to generate various brainwave-entertainment meta-soundtracks of the user’s choice.
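For the anaglyph-converting option mentioned in the list above, the text does not describe how “VERA-CITy-Com” performs the conversion. The sketch below shows the common red-cyan composition as one possible reading: the red channel is taken from the left-eye image and the green and blue channels from the right-eye image. The function name and the nested-list image representation are assumptions made for illustration.

```python
def red_cyan_anaglyph(left_pixels, right_pixels):
    """Combine two same-sized RGB images into one red-cyan anaglyph.

    Each image is a list of rows; each row is a list of (r, g, b) tuples.
    """
    if len(left_pixels) != len(right_pixels):
        raise ValueError("images must have the same height")
    result = []
    for left_row, right_row in zip(left_pixels, right_pixels):
        if len(left_row) != len(right_row):
            raise ValueError("images must have the same width")
        # Red from the left-eye view; green and blue from the right-eye view.
        result.append([(l[0], r[1], r[2]) for l, r in zip(left_row, right_row)])
    return result


if __name__ == "__main__":
    # Tiny 1x2 example images with made-up pixel values.
    left = [[(200, 10, 10), (180, 20, 20)]]
    right = [[(10, 150, 160), (20, 140, 170)]]
    print(red_cyan_anaglyph(left, right))
```

Viewed through a red-cyan filter pair, each eye then receives mostly the channels taken from its own view. The yellow-cyan and red-green pairs would need a different channel assignment, which is not sketched here.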

THEORY-BASED PREDICTIONS & PERSPECTIVES

Depending on which statistical data are taken into account and which “authority” is listened to and trusted, there are quite a few science-proven reasons why modern 3D movies and Virtual Reality technologies have unacceptable side effects, which start with dizziness and discomfort, continue with headaches and nausea, and end with suffering from certain psychological problems instead of treating them.

The present publication, written on behalf of the "Author", is part of a manuscript adapted for reading-in-annotation on this web-site. The full version of the manuscript is available on request. The Author confirms being the sole contributor of this work, approved for publication exclusively on this web-site. The Author declares that his theoretical research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References:

File number: SR 1-845123021, Registered eCO on 11/1/2012, U.S. Copyright Office, Attn: Public Information Office-LM401, Certificate of Registration: TXu 1-886-214, Effective date of registration: December 3, 2012

[2]. Anaglyph Dreams Inside Out Scripting (ADIOS)/Experiencing Live Dreaming Inside Out (ELDIO):

Certificate of Registration: TXu 1-861-293, Effective date of registration: December 3, 2012, U.S. Copyright Office, eCO Registration No. SR 1-849955616, dated on 11/13/2012, File number: SR 1-845123021, Registered eCO on 11/1/2012.
